OpenAI's ChatGPT Explained

Chatbots are nothing new. Odds are, you interact with this sort of Artificial Intelligence (AI) technology every day: for example, when you click the icon in the bottom right corner of a website that says "How can I help you today?" to figure out how to make a return on a site that seems impossible to navigate. Tools like these are typically designed to facilitate interactions between organizations and their customers. But what if, instead of helping you navigate a website, a chatbot could answer any question, about anything? That is why many people think ChatGPT is changing the game.

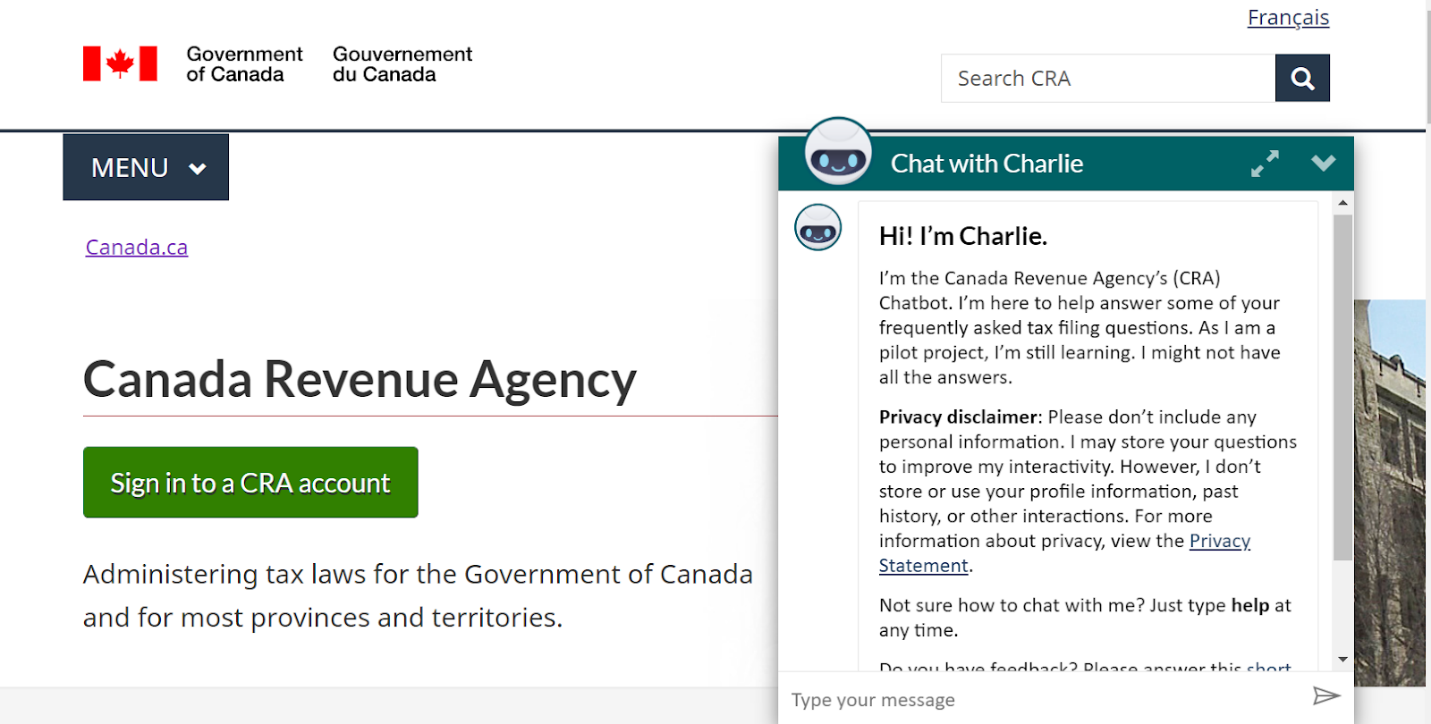

Image description: Screenshot of the Canada Revenue Agency's homepage. On the left, "Canada Revenue Agency" appears in bold text with a sign-in button below it. On the right is a pop-up for the agency's chatbot, named Charlie, which prompts visitors to type a message describing what they are looking for.

The basics

Built by OpenAI, a California-based AI research organization, ChatGPT is an AI-powered chatbot programmed to produce original content, synthesize information, and generate realistic conversations with human users. The software was made available to the public on November 30, 2022, via OpenAI's website, and while it is still in the research phase, anyone can sign up and test it out for free.

ChatGPT uses Generative Pre-trained Transformer 3.5 (GPT-3.5) language technology to interpret and respond to user input. From a brief prompt or a few seed words, the program can generate almost anything in a matter of seconds, from intricate Python code to college-level essays to songs and poetry. Responses generated by ChatGPT were even reportedly good enough to pass Google's coding interview for an engineering position paying $183,000 USD a year.

So what can the program be used for, and how does it work? We asked ChatGPT, and these are the responses we got:

Prompt: In plain language, write what can ChatGPT be used for in 150 words or less.

ChatGPT can be used to build conversational applications such as chatbots, virtual assistants, and information retrieval systems. These applications can be used in a variety of ways, such as answering customer inquiries, providing product or service information, or providing personalized recommendations.

ChatGPT is trained on a large dataset of conversations and can generate natural-sounding responses that are context-aware and can be tailored to individual users. It can also be used to create interactive stories and games, as well as to simulate conversations between virtual agents. The applications of ChatGPT are virtually limitless, and it can help to make applications more engaging and user friendly.

Prompt: In simple terms, how does ChatGPT generate its responses?

ChatGPT uses a type of artificial intelligence called natural language processing (NLP) to generate its responses. NLP is a way for computer systems to understand, interpret, and generate human language. To generate responses, ChatGPT looks at the context of the conversation and generates an appropriate response based on its understanding of the conversation. It uses a pre-trained language model to generate the response. This model is trained on large datasets of conversational data to learn the patterns and nuances of natural language.

In other words, people can ask questions in natural language and get answers, which is why uses such as virtual agents and answering customer inquiries make sense. But while ChatGPT is modelled after a chatbot, the implications are broader. What if a newspaper editor asks it to "write an article about the fall outlook for the economy," or a student asks it to "write an essay about how the Treaty of Westphalia came to be"? It provides shockingly in-depth answers in those cases too, with a major caveat, which we'll get to. And what if a public servant asks it to "write a briefing note on trade issues"?

What does this mean?

The potential of mature, mainstream language models trained on massive datasets to disrupt and automate customer service, and to generate creative content and insights from data, has provoked both excitement and fear.

Many people see the potential and value of automated content development; some major web content providers are already quietly using AI writers. Others see risks and disruption: to a labour market where employment is often already precarious, and, in the longer term, a dehumanization of what we read and consume daily. And because language models are trained on internet content, which may increasingly include text written by language models, they could become a blurry echo of themselves.

Academic integrity could also be threatened: because the program produces original content, students can use it to generate essays on any topic.

Since the program learns from text that humans wrote, which may be biased, it may replicate those biases and produce discriminatory outputs. We've seen disastrous examples of this with other large-scale language models in recent years.

Bias can creep into algorithms in several ways. AI systems learn to make decisions based on training data, which can include biased human decisions or reflect historical or social inequities, even if sensitive variables such as gender, race, or sexual orientation are removed.

There is also a concern about the spread of misinformation and disinformation. Although the technology is designed to learn from its mistakes, improve its responses over time, and challenge incorrect premises, ChatGPT cannot yet verify the accuracy of the information it outputs. Where its training data is wrong, it repeats the error without knowing it, and it does so very confidently. Small changes in a prompt can also produce very different outputs.

ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers.

This is the crucial thing: language models don't understand concepts the way humans do. Instead, they calculate statistical probabilities that a certain set of words and phrases should follow what came before.
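To make "calculating probabilities" concrete, here is a deliberately tiny sketch in Python. It is a toy under stated assumptions, not how ChatGPT is built: it counts which word follows which in a made-up three-sentence corpus (a so-called bigram model), turns those counts into probabilities, and then repeatedly picks the most probable next word. GPT-3.5 instead uses a very large neural network working on pieces of words, but its basic job, predicting what comes next from what came before, is the same. The corpus and all function names here are hypothetical, chosen only for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical toy training text; real language models learn from vastly larger corpora.
corpus = (
    "the cat sat on the mat . "
    "the cat ate the fish . "
    "the dog sat on the rug ."
)


def train_bigrams(text):
    """Count how often each word follows each preceding word."""
    words = text.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probabilities(counts, prev):
    """Turn raw counts into probabilities P(next word | previous word)."""
    followers = counts[prev]
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()}


def generate(counts, start, length):
    """Greedily append the most probable next word, one word at a time."""
    output = [start]
    for _ in range(length):
        probs = next_word_probabilities(counts, output[-1])
        if not probs:  # no word ever followed this one in the training text
            break
        output.append(max(probs, key=probs.get))
    return " ".join(output)


counts = train_bigrams(corpus)
print(next_word_probabilities(counts, "the"))
print(generate(counts, "the", 5))
```

Running it shows that "cat" is twice as likely as any other word to follow "the", and generates the phrase "the cat sat on the cat". Every step is statistically plausible, yet the result is nonsense, because the model tracks only word patterns, not meaning. Real chatbots typically sample from these probabilities rather than always taking the top word, which is one reason the same prompt can yield different answers.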

And these areas of concern just scratch the surface. All of this means that, with ChatGPT and similar technologies, ethics must be considered early and monitored constantly.

What now?

ChatGPT raises a lot of questions, but there's no dataset about the future. Still, in a sea of noise about technological disruption, this leap forward definitely feels like a signal. At times like these, governments' capacity to conduct foresight about potential impacts, learn in lockstep with other sectors, and keep up with the pace of change is vital.

In the Government of Canada, the Directive on Automated Decision-Making, which came into effect on April 1, 2019, continues to guide the government's use of such technologies. The Directive outlines the government's plan to integrate and evolve with AI, particularly regarding administrative decision-making processes and service delivery.

The Directive applies to automated decision systems used for decisions that impact the legal rights, privileges or interests of individuals or businesses outside of the government—for example, the eligibility to receive benefits, or who will be the subject of an audit.

The federal government's role as legislator and regulator, however, raises different questions. In the EU, the Artificial Intelligence Act has been proposed, and work on responsible AI principles and frameworks is under way around the world. Still, the ethical implications and increasingly widespread availability of AI tools may push governments to enter this space more quickly or forcefully.

Why do you need to know about this?

ChatGPT and similar AI technologies are likely here to stay, and they will affect the way we work and interact in society in one way or another. As they advance and adoption spreads, knowledge, awareness, and the ability to work alongside these innovations will help you remain adaptable. Each of us is responsible for engaging in continuous learning and pursuing skills that will help us serve people better. Canada's Digital Ambition and the Digital Standards are good guides toward this goal.

Nobody can predict the future, but it is safe to say that being prepared is key. Challenge yourself to use the resources available to you to build your knowledge. You may even find innovative ways to apply what you've learned and integrate AI technologies into your work, ethically of course (and never copy and paste protected, personal, or potentially sensitive text into online tools).

Before you go, some food for thought

- Have you read Canada's Digital Ambition and Canada's Digital Standards? How about the Directive on Automated Decision-Making? In what ways can the adoption of modern technologies such as ChatGPT enhance, or challenge, the delivery of government in a digital age?

- How can staying informed about new technological innovations help you and the organization foster resilience?

- If you use AI in your work, what measures have you put in place to ensure that the technology is being used ethically and responsibly?

Resources